Greetings

Sorry for the long hiatus in posting. I had no time to create anything, what with all the final exams of grade 11, and I didn't have my kits for the entire summer vacation. In any case, I am back now.

A technology that has now caught my interest is Arduino. Arduino is an open-source electronics prototyping platform, which comes with an AVR ATmega328 microcontroller, 14 digital input/output pins (6 of which can be used as PWM outputs), 6 analog inputs, a 16 MHz crystal oscillator, a USB connection, a power jack, an ICSP header, and a reset button. The microcontroller can be programmed using the Arduino programming language, which is based on Wiring. Wiring is pretty much C/C++, but with two mandatory functions that have to be defined for the program to run: setup() and loop().
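The setup()/loop() pair can be modelled in a few lines of plain C++. On the board, the Arduino core provides its own main() that calls setup() once and then calls loop() over and over without ever returning. The runSketch() helper and the two call counters below are hypothetical stand-ins, added only so the call pattern can be checked off the board.

```cpp
// A stripped-down model of how the Arduino core runs a sketch.
// The counters exist only for illustration; a real sketch does not need them.
int setupCalls = 0;
int loopCalls = 0;

// Runs once after power-up or reset: pinMode() calls and other one-time set-up.
void setup() {
    ++setupCalls;
}

// Called again every time it returns: read inputs, update outputs.
void loop() {
    ++loopCalls;
}

// Hypothetical stand-in for the core's main(). The real one loops forever;
// this version stops after `iterations` passes so it can finish.
void runSketch(int iterations) {
    setup();
    for (int i = 0; i < iterations; ++i) {
        loop();
    }
}
```

Calling runSketch(3) leaves setupCalls at 1 and loopCalls at 3, which is the whole contract: one-time initialisation, then an endless polling loop.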

Once I got my hands on an Arduino, I immediately borrowed a seven-segment display, which has 6 input pins and 2 ground pins. After connecting these input pins to six digital input/output pins on the board, I connected the two ground pins to ground on the Arduino. Then I got a simple push button and connected it to +5V, ground, and one of the digital input/output pins on the board. So whenever I pressed the button, +5V would be sent to that pin, which the program could detect.
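Detecting the press takes a little care, because loop() runs very many times per second: simply checking whether the pin is HIGH would count one press many times over, and a mechanical button also "bounces" between HIGH and LOW for a few milliseconds when it closes. The sketch below, in plain C++ so it can be tested off the board, reports a press only when the reading changes from LOW to HIGH and then stays steady for a settling time. The class name ButtonPress, the 20 ms settling time used in the example, and the assumption that the pin reads HIGH only while the button is held (i.e. the ground connection goes through a pull-down resistor) are illustrative assumptions, not details from the post.

```cpp
#include <cstdint>

// Hypothetical debouncer: turns raw pin samples into one event per press.
// Assumes the sample is true (HIGH) only while the button is held down.
class ButtonPress {
public:
    explicit ButtonPress(uint32_t settleMs) : settleMs_(settleMs) {}

    // Call once per loop() with the current reading and the time in ms.
    // Returns true exactly once for each debounced LOW -> HIGH change.
    bool update(bool reading, uint32_t nowMs) {
        if (reading != lastReading_) {
            // Raw input changed (press, release, or bounce): restart the timer.
            lastReading_ = reading;
            lastChangeMs_ = nowMs;
            return false;
        }
        // Unsigned subtraction stays correct when the ms counter wraps around.
        if (nowMs - lastChangeMs_ >= settleMs_ && reading != stable_) {
            stable_ = reading;  // input has been steady long enough to trust
            return stable_;     // true on a press, false on a release
        }
        return false;
    }

private:
    uint32_t settleMs_;
    uint32_t lastChangeMs_ = 0;
    bool lastReading_ = false;
    bool stable_ = false;
};
```

On the board, update() would be fed digitalRead(pin) == HIGH and millis() on every pass through loop(), and the displayed digit advanced whenever it returns true.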

Then I created a simple program that defines which LEDs in the seven-segment display must be on to show a given digit. For example, for the digit 1, the state is defined as

0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0

which means that only 2 leads of the seven-segment display must be given power, in this case leads 4 and 6. There is also code in the program that increments the number by one whenever the button is pressed, rolling back to 0 when the number is 9.
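The post stores one 0/1 value per lead for each digit. A compact alternative, sketched here in plain C++ under different assumptions, packs the seven segments of each digit into a single byte using the conventional segment names a to g (bit 0 = a through bit 6 = g), and assumes a common-cathode display, where a segment lights when its pin is driven HIGH. The names kDigitSegments, segmentLit(), litCount() and nextDigit() are hypothetical, and since the post does not give the segment-to-pin wiring, that mapping is left out; the rollover arithmetic matches the increment-and-wrap behaviour described above.

```cpp
#include <array>
#include <cstdint>

// Segment bits for digits 0-9: bit 0 = a, 1 = b, ..., 6 = g.
// Assumed standard layout; the post's own table is indexed by lead instead.
constexpr std::array<uint8_t, 10> kDigitSegments = {
    0x3F,  // 0: a b c d e f
    0x06,  // 1: b c
    0x5B,  // 2: a b d e g
    0x4F,  // 3: a b c d g
    0x66,  // 4: b c f g
    0x6D,  // 5: a c d f g
    0x7D,  // 6: a c d e f g
    0x07,  // 7: a b c
    0x7F,  // 8: all seven segments
    0x6F,  // 9: a b c d f g
};

// True if segment `seg` (0 = a ... 6 = g) should be lit for `digit` (0-9).
// On the board this would become digitalWrite(pinFor(seg), lit ? HIGH : LOW),
// where pinFor() is a hypothetical segment-to-pin lookup.
bool segmentLit(int digit, int seg) {
    return (kDigitSegments[digit] >> seg) & 1u;
}

// Number of segments lit for `digit`, e.g. 2 for the digit 1.
int litCount(int digit) {
    int n = 0;
    for (int seg = 0; seg < 7; ++seg) {
        n += segmentLit(digit, seg) ? 1 : 0;
    }
    return n;
}

// Digit to show after one button press: 0 -> 1 -> ... -> 9 -> 0.
int nextDigit(int digit) {
    return (digit + 1) % 10;
}
```

With this layout, showing a digit becomes a loop over seven segments instead of one hand-written table row per lead, and the modulo keeps the counter in the 0-9 range without a separate check for 9.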

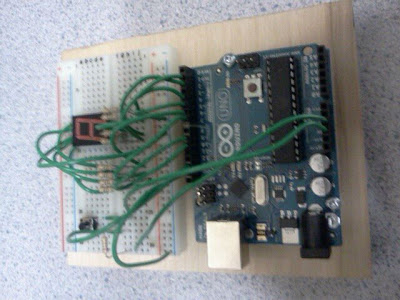

I don't have a schematic of my circuit, but I did manage to take some pics. Here you go:

*View from the top. Notice the 7 resistors.*

*Another view.*

And here is the fully annotated code. You will need the Arduino software to compile it.

Link to the code (.pde file)

This is not the end.